Globally, there are almost 4,000,000 podcast shows and more than 60,000,000 episodes available. Two new podcast episodes are published every minute: conversations, interviews, documentaries, fiction, and stories, all waiting to be heard. With such an abundance of content, listeners miss out on a lot of “hidden gems”, and podcasters and publishers lose the opportunity to connect with new audiences.

Unfortunately, audio is an inaccessible medium by nature. It’s inaccessible to people with hearing impairments, to people who may not be fluent in the language the podcast was recorded in, and also to search engines: even if an audio file is tagged with metadata that a search engine can index, its actual content remains hidden, and listeners have no way to preview it.

Audio is a black box, and this is true even for a medium that in recent years has seen the fastest-growing audience: we are talking about podcasts.

Just pick your podcast player of choice and try searching for a specific topic you’re interested in, like your favorite artist, a new piece of tech, an event, or a city… in most cases, you will be disappointed by the results. Podcast search engines rely exclusively on the only data available: titles and descriptions.

And even if you happen to find a title or description that matches your interest, you can’t preview the episode’s content at a glance. Podcast players generally let you play audio at 2x speed or faster, but that’s not enough to get a sense of what the content actually is and whether it’s worth listening to.

Just like with music, the issue is the lack of data: the “core” of the content — the speakers and what they discuss inside each episode — is inaccessible and impossible to preview.

At Musixmatch, we have already tackled this issue on the music side by transcribing lyrics: not only do lyrics create a deeper connection between artists and fans, they also unlock a new discovery paradigm. Through our research, we observed that people were searching for songs in their favorite music apps by typing the lyrics they could remember, but they couldn’t find them because, unless they knew the exact song title and artist, lyrics were not indexed. By integrating our database and search technology with all the top international music platforms, we have managed to solve this issue. Just ask Alexa or Siri: “What’s the song that goes: feeling good never stressed”… and voilà!

Lyrics also reveal more about a song. For instance, by analyzing lyrics, we have built an AI algorithm that can understand a song’s mood (for which lyrics offer much more information than the beat or melody), its main themes, and even the things it talks about. Did you know that Gucci has been mentioned 22,705 times in songs over the years? Even in music, words matter.

Starting today, we are making thousands of podcast episodes accessible for the first time, with Musixmatch Podcasts. While this is just the first beta release of this product, we are already super excited about what’s to come.

Over the last year, the Musixmatch AI has been transcribing, indexing and categorizing the most listened-to podcasts across the world, creating a graph that ranks them by what is being talked about and who is participating in the discussion.

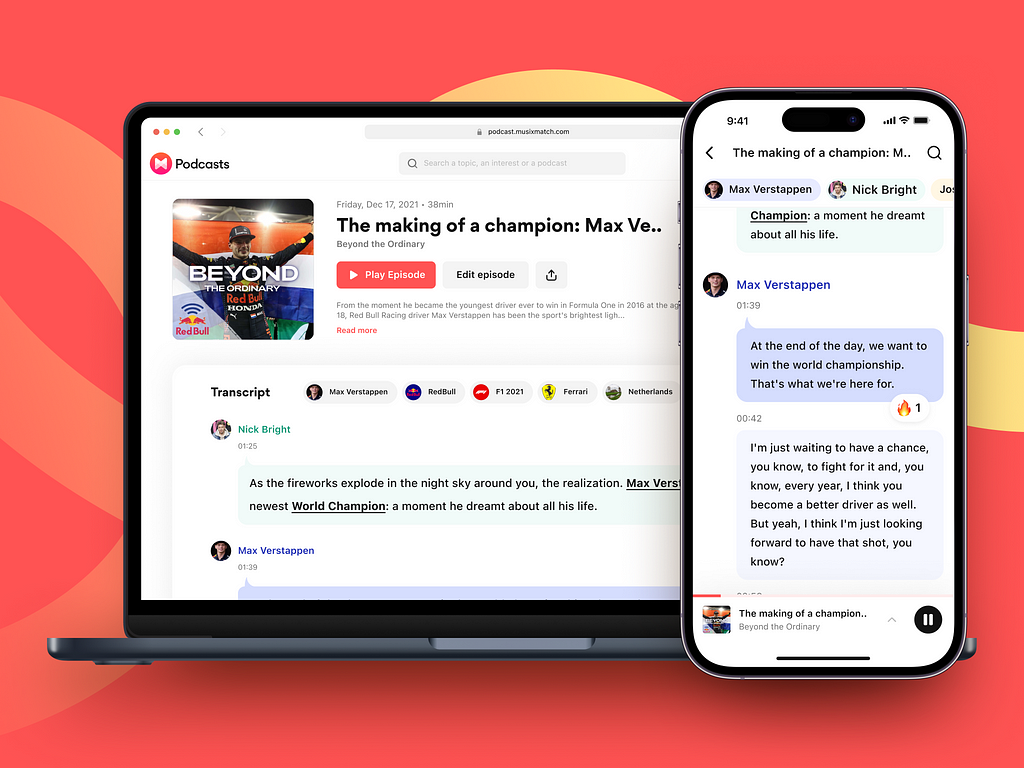

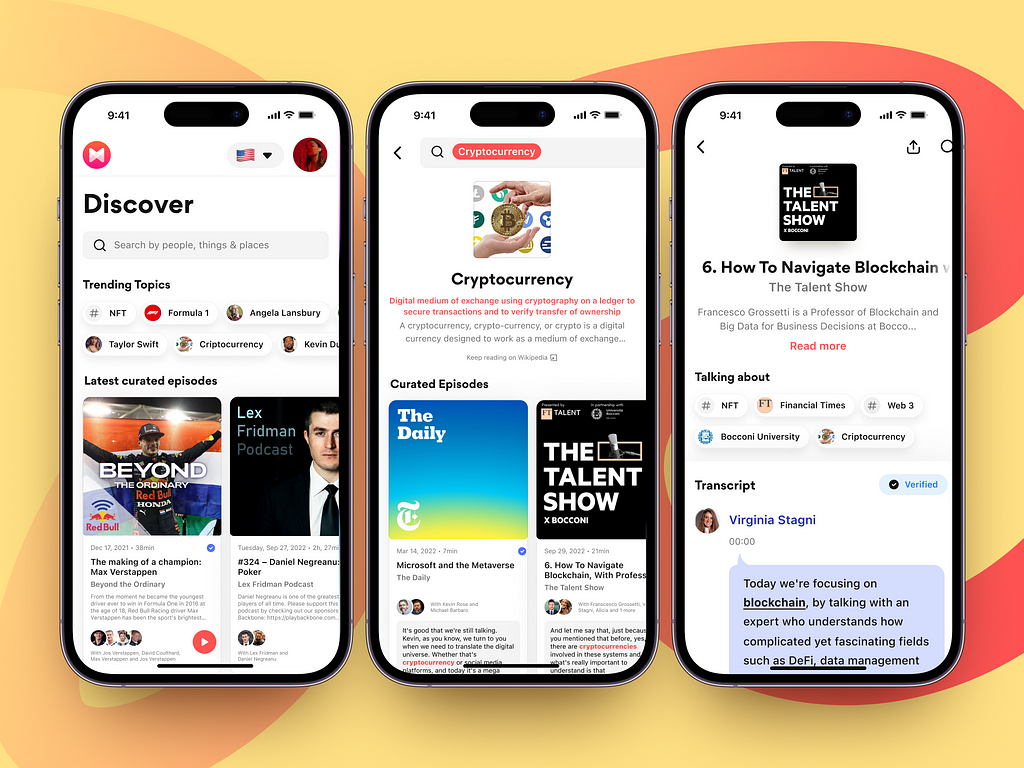

Every episode is fully transcribed and synchronized, with speakers tagged in every paragraph. This makes podcasts easier to scan: listeners can jump to the parts they are really interested in and “read through” an episode. It’s just like watching a movie with subtitles.

We believe that this new experience opens audio up to a whole new range of listeners who, until now, had been unable to enjoy it: people with hearing impairments or those who aren’t fluent in the podcast’s spoken language. This is the most accessible podcast experience to date.

We want Musixmatch Podcasts to be a place where technology, podcast creators, listeners and communities of experts come together to create a new, enriched experience.

Our ultimate goal is to let anyone discover, enjoy and share great audio content without boundaries. This is achieved by “revealing” what’s behind the audiowave.

Thanks to AI, the most popular episodes are automatically transcribed and synchronized every day, with topics automatically identified, connected, and ranked. However, AI alone is not enough to guarantee quality content: its accuracy is good but not perfect, and it degrades whenever the names of people, organizations, or brands are mentioned.

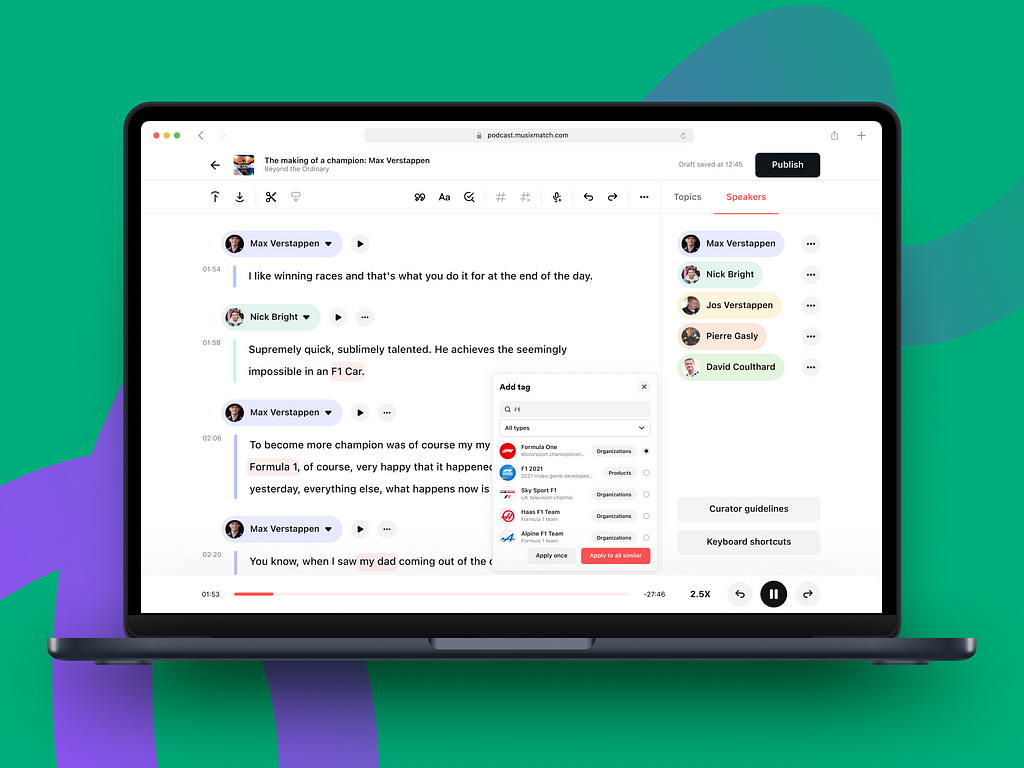

That’s why we have created an open environment where our Community of Curators and podcast owners can edit and curate AI transcriptions using a dedicated online tool accessible right from the website: the Podcast Studio. Our goal is to bring “podcast subtitles” to a whole new level of quality and to make them universally adopted and accessible.

This is a very ambitious goal, and that’s why we are calling on content creators and community enthusiasts to help curate and verify that content, just as was done for music lyrics. The outcome of this collective work will be verified, time-synchronized transcriptions tagged with speakers and topics. Thanks to your contributions, audio can for the first time be navigated non-linearly, just like the web, and presented with a beautiful new user experience, enhanced by additional metadata and accessible to everyone.

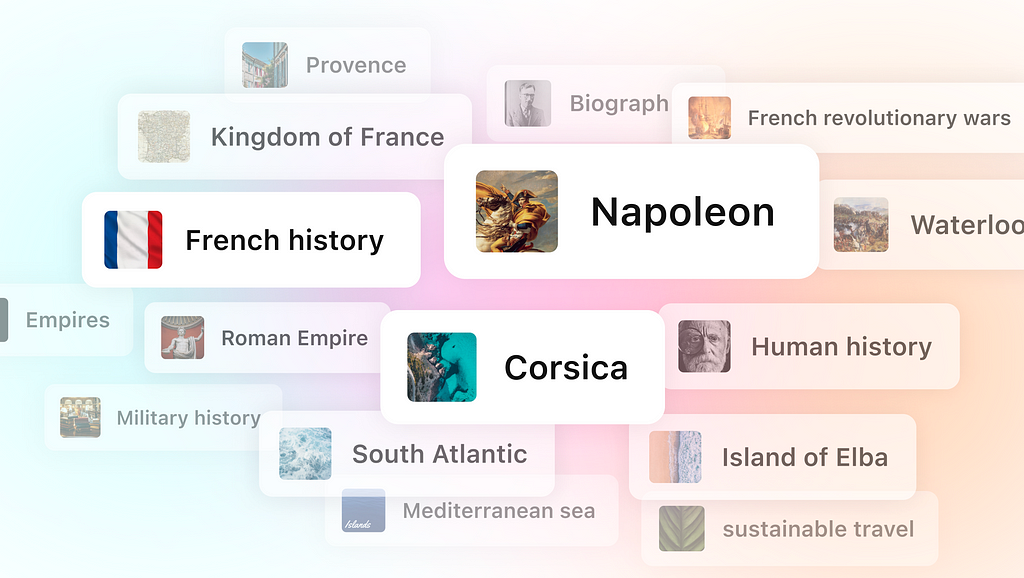

Building on Umberto, Musixmatch’s base NLP model architecture, our AI can tag the keywords mentioned in transcriptions, including people, things, places, and organizations. These topics (technically referred to as entities) are linked directly to Wikipedia IDs so that they can be searched and ranked universally across all languages.
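To make entity tagging more concrete, here is a minimal sketch that swaps in a plain dictionary lookup for the Umberto-based model: each known alias maps to a single entity ID, so different surface forms of the same topic resolve to one identifier. The alias table, the placeholder IDs (`ENT_NYC`, `ENT_GUCCI`), and the function names are hypothetical stand-ins, not Musixmatch’s actual model or its Wikipedia ID mapping.

```python
import re
from collections import Counter

# Hypothetical alias table: lowercased surface form -> entity ID.
# The IDs are placeholders standing in for real Wikipedia identifiers.
ALIASES = {
    "gucci": "ENT_GUCCI",
    "new york city": "ENT_NYC",
    "new york": "ENT_NYC",
    "nyc": "ENT_NYC",
}


def tag_entities(text: str, aliases: dict[str, str]) -> list[tuple[str, str]]:
    """Return (matched text, entity ID) pairs in the order they appear."""
    # Try longer aliases first so "new york city" wins over "new york".
    ordered = sorted(aliases, key=len, reverse=True)
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(a) for a in ordered) + r")\b",
        re.IGNORECASE,
    )
    return [(m.group(0), aliases[m.group(0).lower()]) for m in pattern.finditer(text)]


def mention_counts(pairs: list[tuple[str, str]]) -> Counter:
    """Count mentions per entity ID, so aliases of one entity add up together."""
    return Counter(entity_id for _, entity_id in pairs)


if __name__ == "__main__":
    sentence = "We flew to NYC, then drove around New York City looking for Gucci."
    pairs = tag_entities(sentence, ALIASES)
    print(pairs)
    print(mention_counts(pairs))
```

Running it finds three mentions and counts two for `ENT_NYC`, even though the city appears under two different names. A lookup table cannot use context to disambiguate (for example, whether “Apple” means the company or the fruit), which is exactly where a trained NLP model earns its place.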

This analysis produces a graph in which podcast episodes are categorized by the topics mentioned in their content and ranked by factors such as how many times a topic is mentioned or how authoritative the presenter is on that topic: we call it TopicRank. And because we are not simply clustering strings of text but actual entities with individual IDs, searches for different aliases of the same entity are grouped around the same topic.
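As a rough illustration only (this is not the actual TopicRank formula), the sketch below combines the two factors named above: for a given entity ID, each episode’s score is its mention count, boosted by a hypothetical presenter-authority value between 0 and 1. The `Episode` fields, the `mentions * (1 + authority)` weighting, and the sample data are all assumptions chosen for readability.

```python
from dataclasses import dataclass, field


@dataclass
class Episode:
    title: str
    # Entity ID -> number of mentions in the episode transcript.
    mentions: dict[str, int]
    # Entity ID -> hypothetical presenter authority on that entity, in [0, 1].
    authority: dict[str, float] = field(default_factory=dict)


def topic_score(episode: Episode, entity_id: str) -> float:
    """Toy score: mention count, boosted by up to 2x for an authoritative presenter."""
    mentions = episode.mentions.get(entity_id, 0)
    authority = episode.authority.get(entity_id, 0.0)
    return mentions * (1.0 + authority)


def rank_episodes(episodes: list[Episode], entity_id: str) -> list[tuple[str, float]]:
    """Return (title, score) for episodes that mention the entity, best first."""
    scored = [(ep.title, topic_score(ep, entity_id)) for ep in episodes]
    return sorted(
        (item for item in scored if item[1] > 0),
        key=lambda item: item[1],
        reverse=True,
    )


if __name__ == "__main__":
    catalog = [
        Episode("Fashion Weekly", {"ENT_GUCCI": 4}, {"ENT_GUCCI": 0.9}),
        Episode("Brand Stories", {"ENT_GUCCI": 6}, {"ENT_GUCCI": 0.1}),
        Episode("Tech Talk", {"ENT_GUCCI": 1}),
        Episode("City Walks", {"ENT_NYC": 6}),
    ]
    for title, score in rank_episodes(catalog, "ENT_GUCCI"):
        print(f"{title}: {score:.1f}")
```

In this toy data, “Fashion Weekly” (7.6) outranks “Brand Stories” (6.6) despite fewer mentions, because its presenter is treated as more authoritative on the topic. Since scores are keyed by entity ID rather than by string, every alias of an entity contributes to the same ranking.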

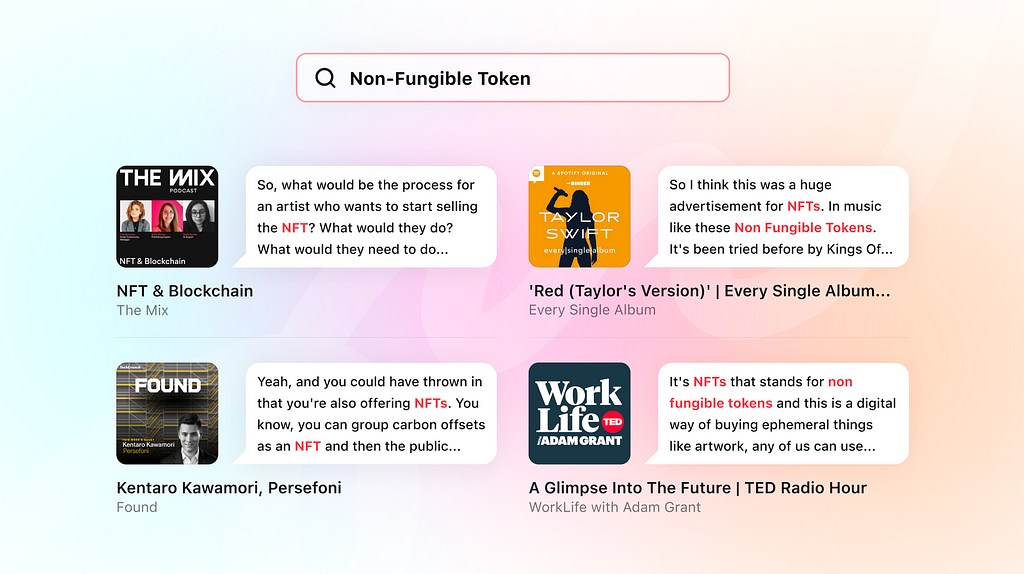

Thanks to this classification, people can search for any keyword and find transcribed podcasts that match their query, ordered by relevance. Our search index returns results that are far more detailed and in-depth than those of listening services that rely on standard RSS metadata and predefined genres and categories. We think this can become an excellent tool for students, researchers, and journalists looking for specific topics in Musixmatch’s vast archive.

Furthermore, because search runs over the transcriptions themselves, results include time-synchronized snippets containing the topic, so users can jump straight to the point where it’s being discussed. It’s like searching on Google, but for podcasts!
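A minimal sketch of snippet search over a time-synchronized, speaker-tagged transcript: each segment carries its start time in seconds, and a query returns every matching segment with a `mm:ss` timestamp that a player could seek to. The `Segment` structure and the `find_snippets` and `format_timestamp` functions are hypothetical, and plain case-insensitive substring matching stands in for the entity-based search described above.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start: float  # seconds from the beginning of the episode
    speaker: str
    text: str


def format_timestamp(seconds: float) -> str:
    """Format seconds as mm:ss; fractions are dropped and minutes keep counting past 59."""
    minutes, secs = divmod(int(seconds), 60)
    return f"{minutes:02d}:{secs:02d}"


def find_snippets(segments: list[Segment], query: str) -> list[tuple[str, str, str]]:
    """Return (timestamp, speaker, text) for every segment that contains the query."""
    needle = query.casefold()
    return [
        (format_timestamp(seg.start), seg.speaker, seg.text)
        for seg in segments
        if needle in seg.text.casefold()
    ]


if __name__ == "__main__":
    transcript = [
        Segment(12.0, "Host", "Welcome back to the show."),
        Segment(95.5, "Guest", "I first saw a Gucci runway show in Milan."),
        Segment(301.2, "Host", "Let's come back to Gucci for a moment."),
    ]
    for timestamp, speaker, text in find_snippets(transcript, "gucci"):
        print(f"[{timestamp}] {speaker}: {text}")
```

This prints two hits, at `01:35` (Guest) and `05:01` (Host). Keeping the start time and speaker on every segment is what lets a result link directly to the moment a topic comes up.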

If you have ever watched automatically generated subtitles, you have probably noticed that they are rarely perfect, especially when the names of people, organizations, or brands come up.

This is why we rely on AI to analyze content at scale, automating transcription and NLP analysis, and on our expert community to guarantee quality and add further metadata.

To enable AI and human collaboration on podcasts, we have built brand-new editing software, the Podcast Studio, which allows editors around the world to improve upon the data extracted automatically by artificial intelligence.

This software has already been used extensively by editors at Chora Media, the publisher of some of the most listened-to Italian podcasts.

"It's not about hearing, it's about listening.

Since we started, Chora Media has been focused on inclusion, integration and accessibility, giving a voice to a broad range of authentic narratives by merging different formats in a non-conventional way.

Thanks to Musixmatch, not only can we open our content to people who couldn't access it before, but we can also offer a deeper and more diverse user experience, in line with the depth that distinguishes our podcasts."

There’s so much great content “hidden” behind audio, and podcasts represent a formidable source of content that is meant to be shared.

The powerful thing about time-synced transcripts is that they give an audio file a form and a navigational structure, and they can be used to give it a visual representation.

Using this data, we created the simplest way to share podcast content with friends and on social media. With just a few clicks, any user can share their favorite clip of a podcast with enriched text and speaker imagery directly to friends or via their social media feeds. We believe this will unlock huge potential for podcast content to be enjoyed like never before.

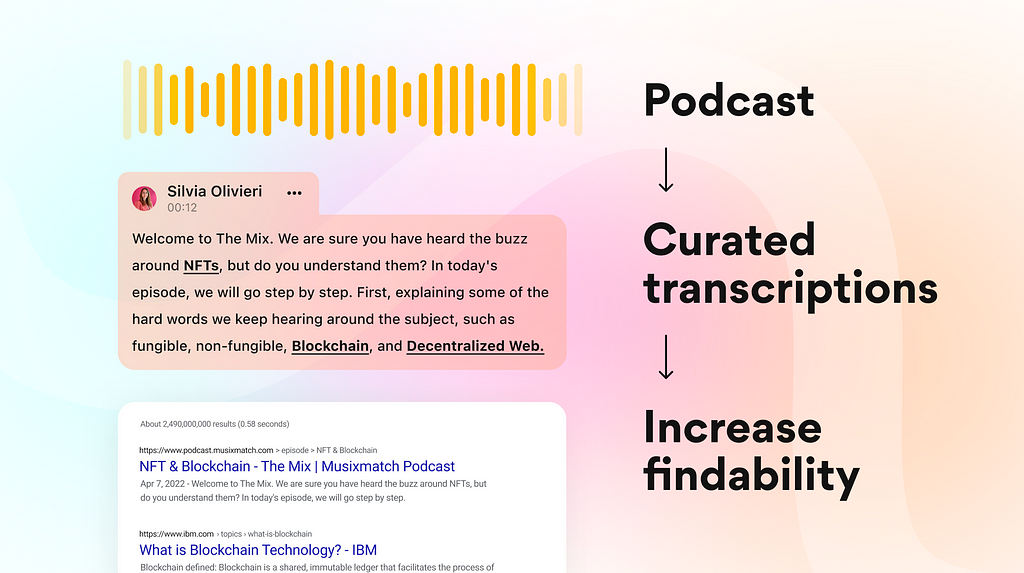

The Musixmatch Podcasts experience isn’t limited to its own website. Publishers and podcasters who claim their show can easily embed their episode transcriptions in their own web channels and apps. Embedded transcriptions are SEO-friendly, so they can be found simply by searching on Google.

We have already enabled this feature on the Financial Times Talent channels, and we are excited to bring it to more platforms.

“Our vision is to bring our audience a useful learning tool through our podcast content. Giving access to a new interaction with our audio through the Musixmatch products is an exciting opportunity to offer a 360-degree UX that touches not only ‘the listening’ but gives a holistic digital experience of our brand. We are a hub of innovation here at the Financial Times: Musixmatch is a disruptive partner to work with to experiment and take our products to the next level.”

With the launch of this public beta, we are taking the first steps on what we believe will be a long journey. It’s a journey where the barrier between written content (text) and spoken content (audio) will eventually blur. In fact, writing an article and recording it as audio will be pretty much the same thing.

However, that’s not how the world is today. Search engines can only crawl written text; in other words, audio is invisible to Google. This means that businesses around the world invest hours writing articles to help potential customers find them, just as we did with this article.

With Musixmatch Podcasts, we are starting a journey where both written and audio content can be indexed and tagged within the same metadata ecosystem.

If this sounds interesting to you, whether you are a large podcast publisher or an independent podcaster, we would love to talk to you. We have just begun opening up our Podcast Studio to a first batch of early users who will help us shape the future of podcast discovery and accessibility, and we have an extremely exciting roadmap we would love to share with you.

“We are incredibly excited to put our 12+ years of experience in music metadata to work for the larger audio ecosystem, starting with podcasts. While this is a very important milestone for us, this is just the beginning.

As technology matures, this analysis will enable a much wider range of features, like translations (which we already provide for music through our community, and which for podcasts could be narrated by a synthetic voice) and content summarization (think of daily digests for your favorite topics), and it will be instrumental in enabling new interactions with smart radios.

Our vision is that in the near future you will simply ask, from your car, for the latest and greatest on any topic, and listen to that content in your own language, at your desired length, with the ability to hear more insights effortlessly.

The boundary between what’s written and what’s recorded is blurring, both ways.”

Going beyond lyrics: introducing Musixmatch Podcasts was originally published in Musixmatch Blog on Medium.